HY-Motion 1.0: The New Text-to-Motion AI for 3D Animation

- January 7, 2026

- Harish Prajapat

What Is HY-Motion 1.0?

HY-Motion 1.0 is an AI model developed by Tencent that generates realistic 3D human motion from simple text descriptions. Instead of animating characters by hand or using motion-capture hardware, creators describe an action in plain language and the model generates the movement.

The model focuses entirely on how the human body moves, not how a character looks. Because its output is motion only, it is easy to combine with other creative tools like MagicShot, where visuals and scenes are handled separately.

What HY-Motion 1.0 Generates

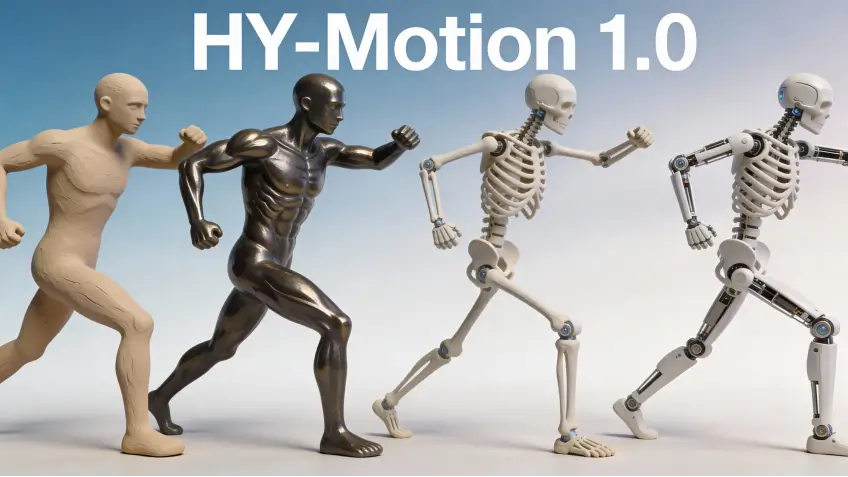

HY-Motion 1.0 produces 3D skeletal motion data that represents human movement over time. This motion can be applied to different 3D characters, regardless of their style or design.

In practical terms, the output includes:

- Natural full-body movement
- Time-based animation sequences
- Motion that can be reused and retargeted

It does not generate character appearance, facial expressions, clothing, environments, or camera movement. Its role is strictly motion generation; appearance and scene are left to other tools.
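To make "skeletal motion over time" concrete, here is a minimal sketch of how such data could be laid out. This article does not describe HY-Motion's actual output format, so the `MotionClip` class, the joint names, and the choice of Euler angles in degrees are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MotionClip:
    """Illustrative container for skeletal motion (not HY-Motion's real format)."""
    fps: float
    joint_names: list[str]
    # frames[t][j] is the (x, y, z) rotation in degrees of joint j at frame t.
    frames: list[list[tuple[float, float, float]]] = field(default_factory=list)

    def add_frame(self, rotations):
        # Every frame must carry exactly one rotation per joint.
        if len(rotations) != len(self.joint_names):
            raise ValueError("one rotation per joint is required")
        self.frames.append(list(rotations))

    @property
    def duration_seconds(self) -> float:
        return len(self.frames) / self.fps


# Hypothetical three-joint skeleton; real skeletons have many more joints.
clip = MotionClip(fps=30.0, joint_names=["hips", "spine", "left_arm"])
for t in range(60):
    # Raise the left arm gradually while the other joints stay at rest.
    clip.add_frame([(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, t * 1.5)])

print(clip.duration_seconds)  # 2.0
```

Nothing in this structure describes a mesh, texture, or camera, which is the point: the clip only records how joints rotate from frame to frame.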

How the Model Works in Practice

The workflow is straightforward. A user writes a short text prompt describing a human action. HY-Motion interprets the movement being described and predicts how a real human body would perform it. The model then outputs a 3D motion sequence defined on a skeleton.

Because the motion is skeleton-based, the same animation can be reused across different characters. This is why HY-Motion is often shown with multiple character types performing the exact same movement.
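The reuse idea can be sketched as a simple joint-name mapping. This is a simplified illustration, not the method HY-Motion or any specific tool uses: the `retarget` function and all joint names are hypothetical, and real retargeting usually also has to account for differences in bone proportions and rest poses.

```python
def retarget(frames, joint_map):
    """Rename joints in every frame using joint_map; unmapped joints are dropped."""
    return [
        {joint_map[name]: rot for name, rot in frame.items() if name in joint_map}
        for frame in frames
    ]


# Two frames of source motion, keyed by hypothetical source joint names.
source_frames = [
    {"hips": (0, 0, 0), "left_arm": (0, 0, 10)},
    {"hips": (0, 5, 0), "left_arm": (0, 0, 20)},
]

# Hypothetical mapping onto a character rig that names its joints differently.
robot_map = {"hips": "Pelvis", "left_arm": "L_UpperArm"}

robot_frames = retarget(source_frames, robot_map)
print(robot_frames[1])  # {'Pelvis': (0, 5, 0), 'L_UpperArm': (0, 0, 20)}
```

The rotations are untouched; only the joint labels change. That is why one generated motion can drive several characters with different looks.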

Why HY-Motion 1.0 Is Useful

Human motion is one of the hardest parts of animation. HY-Motion 1.0 removes much of that complexity by allowing creators to generate motion without animation skills or special equipment.

It is especially useful because it:

- Eliminates the need for motion-capture setups
- Speeds up animation testing and prototyping
- Produces consistent and natural movement
- Works well with modern AI visual tools

For MagicShot users, this means faster creation with more lifelike results.

HY-Motion 1.0 Model Versions

Tencent released two versions of HY-Motion 1.0 to support different hardware setups.

HY-Motion 1.0 (Standard) delivers higher motion quality and smoother movement, but it requires stronger GPU resources.

HY-Motion 1.0 Lite is smaller and more accessible. It runs faster, needs less hardware power, and is recommended for beginners or quick experiments.

Both versions use the same prompts and follow the same workflow.

Writing Prompts That Give Better Results

HY-Motion works best with short, clear, action-focused prompts. The model understands physical movement better than visual details.

Good prompts usually describe:

- One person
- A clear body action
- Simple movement steps

For example, “A person walks forward, stops, and raises one arm” works much better than long descriptive sentences about appearance or emotion. Prompts involving multiple people, animals, camera movement, or clothing details are not supported and often produce poor results.
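As a rough aid, the guidance above can be turned into a small pre-flight check that runs before a prompt is sent to the model. This is only a keyword heuristic and is not part of HY-Motion: the `check_prompt` function, the keyword lists, and the 30-word limit are all assumptions chosen for illustration.

```python
import re

# Hypothetical keyword patterns for the unsupported categories named above.
UNSUPPORTED_HINTS = {
    "multiple people": r"\b(two|three|people|crowd|group|together)\b",
    "animals": r"\b(dog|cat|horse|bird|animal)\b",
    "camera movement": r"\b(camera|zoom|pan|close-up)\b",
    "clothing details": r"\b(wearing|dressed|jacket|dress|shirt)\b",
}


def check_prompt(prompt, max_words=30):
    """Return warning labels for a prompt; an empty list means no issue was found."""
    warnings = [
        label
        for label, pattern in UNSUPPORTED_HINTS.items()
        if re.search(pattern, prompt, re.IGNORECASE)
    ]
    # The word limit is an arbitrary stand-in for "short".
    if len(prompt.split()) > max_words:
        warnings.append("prompt is long")
    return warnings


print(check_prompt("A person walks forward, stops, and raises one arm"))
# []
print(check_prompt("Two people dance while the camera zooms in"))
# ['multiple people', 'camera movement']
```

A check like this will miss plenty of cases and flag some harmless ones, so it works best as a reminder of the prompt guidelines rather than a filter.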

Current Limitations to Know

HY-Motion 1.0 is powerful, but it has clear limits. It does not support facial animation, object interaction, multi-person scenes, looping motion, or environment awareness. It works best for single-person, full-body motion demonstrations.

Understanding these limits helps creators use the model more effectively.

MagicShot also lets you turn simple images into 3D objects for animation and creative projects. You can convert an image into a basic 3D object, apply AI-generated motion, and turn static visuals into dynamic 3D content with minimal effort.

Frequently Asked Questions

HY-Motion 1.0 is an AI model that turns text into 3D human motion.

It was developed by Tencent’s Hunyuan AI research team.

It is suitable for beginners, especially the Lite version.

It does not generate characters or visuals, only motion data.

It works best with short, clear descriptions of single-person actions.

It is best suited to testing, prototyping, and creative workflows.