Apple May Bring Google Gemini to Siri: What It Means for iPhone Users

- January 15, 2026

- Harish Prajapat

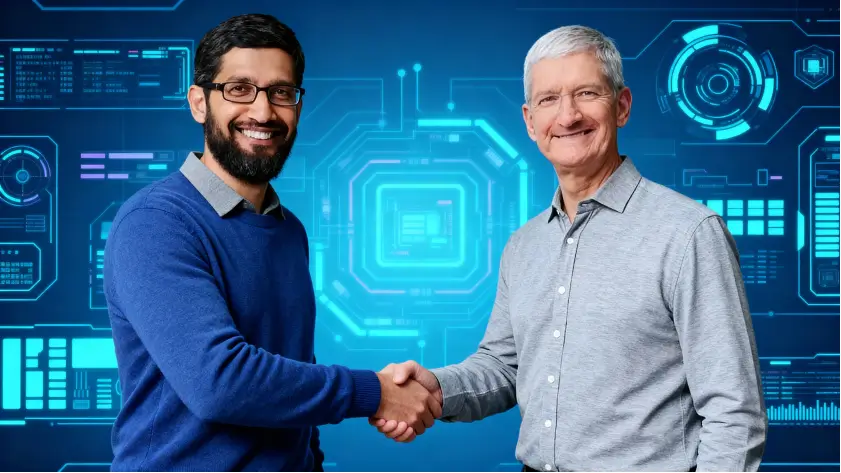

Apple and Google are long-time rivals, but they also have a history of quiet cooperation. Google Search has been the default search engine on iPhones for years, and now a similar pattern may be emerging with AI.

Reports and public comments from Apple executives suggest that Siri’s next evolution includes the ability to route certain requests to powerful external models. Google’s Gemini is one of the AI systems Apple has openly acknowledged as a potential option.

This would not turn Siri into Gemini. Instead, Siri would remain the interface, while Gemini would handle specific tasks behind the scenes.

Why Apple Is Open to External AI Models

Apple’s AI strategy is noticeably different from that of its competitors. Rather than building one massive cloud-based model for everything, Apple is focusing on a hybrid approach.

Apple Intelligence handles many tasks on-device, where privacy and speed matter most. For more complex reasoning, creative writing, or knowledge-heavy queries, Apple prefers to offer users access to best-in-class models instead of forcing a single solution.

This approach allows Apple to move faster without compromising the privacy and on-device speed it prioritizes.

How Gemini Could Work Inside Siri

If Gemini becomes part of Siri’s workflow, users likely won’t interact with it directly. Siri would act as a traffic controller.

Here’s how the experience is expected to work:

- A user asks Siri a question

- Siri determines whether Apple Intelligence can handle it

- If needed, the request is sent to Gemini

- The response returns through Siri, not a separate app

From the user’s perspective, Siri simply feels smarter.
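The routing steps above can be illustrated with a minimal sketch. Nothing here reflects Apple’s actual implementation: the intent names, the `route` function, and both handler functions are hypothetical stand-ins, chosen only to show the idea of one front end deciding between a local handler and an external one.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical intents assumed to be answerable on-device (not an Apple list).
ON_DEVICE_INTENTS = {"set_timer", "send_message", "summarize_notification"}


@dataclass
class Reply:
    text: str
    handled_by: str  # "on_device" or "external"


def route(intent: str, prompt: str,
          on_device: Callable[[str], str],
          external: Callable[[str], str]) -> Reply:
    """Answer locally when the intent is supported; otherwise hand off."""
    if intent in ON_DEVICE_INTENTS:
        return Reply(on_device(prompt), "on_device")
    # Fallback: forward the prompt to the external model. Either way the
    # caller receives a single Reply, so the user never leaves the assistant.
    return Reply(external(prompt), "external")


# Stand-in handlers; a real system would call actual models here.
def local_model(prompt: str) -> str:
    return f"[local] {prompt}"


def external_model(prompt: str) -> str:
    return f"[external] {prompt}"


timer = route("set_timer", "10 minutes", local_model, external_model)
essay = route("open_question", "Explain tides", local_model, external_model)
print(timer.handled_by, essay.handled_by)
```

In this sketch the routing decision is a simple set lookup. A production assistant would presumably use a far richer classifier, but the shape is the same: one entry point, two back ends, one reply.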

Privacy and Control Still Belong to Apple

One of the biggest questions around this collaboration is privacy. Apple has been clear that any external AI integration must meet strict requirements.

Apple controls:

- When a request is sent externally

- What data is shared

- How results are presented

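Requests are expected to be anonymized and opt-in, with users informed when an external model is used. This mirrors Apple’s approach with other AI integrations, such as the existing ChatGPT option in Apple Intelligence, which asks for permission before a request is sent.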
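To make the opt-in and anonymization idea concrete, here is a rough sketch of a gate that runs before anything leaves the device. It is an assumption-laden illustration, not Apple’s design: the `Settings` class, the `prepare_external_request` function, and the two regular expressions are all hypothetical, and real anonymization would need to cover far more than emails and phone numbers.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Deliberately simple patterns, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


@dataclass
class Settings:
    external_ai_opt_in: bool = False  # off unless the user enables it


def redact(text: str) -> str:
    """Replace obvious personal identifiers with placeholders."""
    text = EMAIL.sub("[email]", text)
    return PHONE.sub("[phone]", text)


def prepare_external_request(prompt: str, settings: Settings) -> Optional[dict]:
    """Return a sanitized payload, or None if the user has not opted in."""
    if not settings.external_ai_opt_in:
        return None  # nothing is sent without consent
    return {
        "prompt": redact(prompt),
        "disclose_to_user": True,  # flag so the UI can show which model answered
    }


blocked = prepare_external_request("Explain tides", Settings())
allowed = prepare_external_request(
    "Email jo@example.com or call +1 415 555 0100 today",
    Settings(external_ai_opt_in=True),
)
print(blocked, allowed)
```

The design choice worth noting is that consent is checked first and the default is off, so the redaction step only runs for users who have explicitly enabled external models.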

Why Google Wins From This Deal

For Google, Gemini integration into Siri would be a major distribution win. Siri is used by hundreds of millions of people worldwide, many of whom never open a separate AI app.

Being part of Siri puts Gemini exactly where users already are. It also positions Google as an infrastructure provider for AI, not just a consumer-facing chatbot.

What This Means for OpenAI and Others

Apple has already confirmed support for multiple AI models. That means Gemini would not be exclusive.

This creates a marketplace-like approach where:

- Siri stays neutral

- Users get better answers

- Apple avoids locking into a single vendor

Over time, Apple could rotate or expand supported models based on performance, cost, and user trust.

What’s Confirmed and What’s Not

It’s important to separate facts from speculation.

Confirmed:

- Apple plans to let Siri use external AI models

- Apple has publicly mentioned Gemini as a candidate

- Privacy and user control are core requirements

Not confirmed:

- Exact launch timing

- Whether Gemini will be available at launch

- How many regions will support it initially

Why This Matters

This potential collaboration signals a bigger shift in AI strategy.

The future of assistants may not belong to a single model. Instead, it may belong to platforms that intelligently route each task to the right system at the right time.

Apple isn’t trying to win the AI arms race alone. It’s trying to win on experience.

Frequently Asked Questions

Gemini would not replace Siri. Siri remains the interface, while Gemini may handle some complex requests.

Apple has not announced a release date.

Apple has indicated support for multiple models over time.

Apple says all external AI use will be transparent and controlled.

Apple has acknowledged discussions with Google, but full details are still pending.